Hello friends, I have moved this blog to Substack, which is much easier to use and makes managing subscriptions simpler. All of the posts from here have been migrated to the new blog, and new posts will only appear there. Check it out at https://getsyeducated.substack.com/. You can enter your email to subscribe and get new posts direct to your inbox. Free now, free always. Thanks for reading!....moin

Sunday, December 11, 2022

Thursday, September 8, 2022

Do Registered Reports Take Longer to Publish Than Traditional Articles? The Importance of Identifying the Appropriate Counterfactual

Recently, I attended the annual advisory council meeting for an NSF-funded Ethical & Responsible Research (ER2) project focused on Registered Reports, led by Amanda Montoya and William Krenzer. The project seeks to facilitate uptake of Registered Reports among Early Career Researchers by understanding individual, relational, and institutional barriers to doing so. The first paper from the project has now been published (Montoya et al., 2021), with several more exciting ones on the way. This post is inspired by our conversations during the meeting, and thus I do not lay sole claim to the ideas presented here.

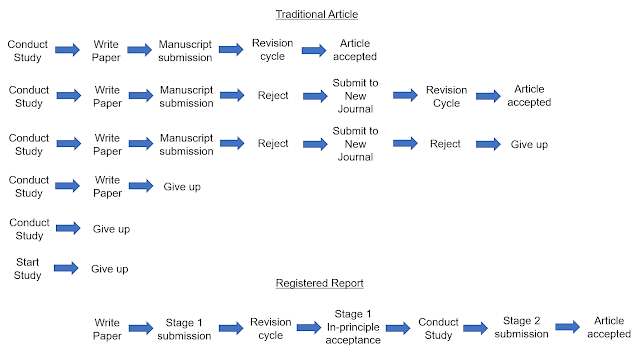

A quick primer on Registered Reports before getting to the point of this post (skip to the next paragraph if you are a know-it-all): Traditional[1] papers involve the process we are all familiar with, in which a research team develops an idea, conducts the study, analyzes the data, writes up the report, and then submits it for publication. We now have plenty of evidence that this process has not served our science well, as it created a system in which publication decisions are based on the nature of the findings of the study, which has led to widespread problems of p-hacking, HARKing, and publication bias (Munafò et al., 2017). Registered Reports are an intervention to address the problems created by the traditional publication process (see Chambers & Tzavella, 2021, for a detailed review). Rather than journals reviewing only the completed study, with the results in hand, Registered Reports break up the publication process into two stages. In Stage 1, researchers submit the Introduction, Method, and Planned Analysis sections, before the data have been collected and/or analyzed. This Stage 1 manuscript is reviewed just as other manuscript submissions are, with the ultimate positive outcome being an in-principle acceptance (IPA). The IPA is a commitment by the journal to publish the manuscript regardless of the results, so long as the authors follow the approved protocol and do so competently. Following the IPA, the researchers conduct the study, analyze the data, and prepare a complete paper, called the Stage 2 manuscript, and resubmit that to the journal for review to ensure adherence to the registered plan and high-quality execution. Whereas publication decisions for traditional articles are made based on the nature of the results, with Registered Reports, publication decisions are based on the quality of the conceptualization and study design.
This change removes the incentive for researchers to p-hack their results or file-drawer their papers (or for editors and reviewers to encourage such), as publication is not dependent on plunging below the magical p-value of .05. In my opinion, Registered Reports are the single most important and effective reform that journals can implement. So, naturally, it is the reform to which we see the greatest opposition within the scientific community[2].

A recurring topic of conversation at our meeting was the review time for Registered Reports, and how it compares to that for traditional papers. Traditional papers have a single review process, whereas with Registered Reports the review process is broken up into two stages. At first glance, then, it seems obvious that Registered Reports would take longer because they include two stages of review rather than one, so it is no surprise that this is a major concern among researchers.

But is this true? As Amanda stated at our meeting, it is hard to know what the right counterfactual is. That is, the sequence and timing of events for Registered Reports are quite clear and structured, but what are the sequence and timing of events for traditional papers? Until she said that, I hadn’t quite thought about the issue in that way, but then I started thinking about it a lot and came to the conclusion that most people almost certainly have the wrong counterfactual in mind when thinking about Registered Reports.

Based on my conversations and observations, it seems that most people’s counterfactual resembles what is depicted in Figure 1. Their starting point for comparison is the point of initial manuscript submission. In my experience as an Editor, the review time for a Stage 1 submission, including the number and difficulty of revisions until the paper is issued an in-principle acceptance (IPA), is roughly equivalent to how long it takes for traditional papers to be accepted for publication.[3]

Under this comparison, Registered Reports clearly take much longer to publish because following the IPA, researchers must still conduct the study and submit the Stage 2 manuscript for another round of (typically quicker) review, whereas the traditional article would have been put to bed.

Figure 1. A commonly believed, but totally wrong, comparison between Registered Reports and traditional articles.

I have no data, but I am convinced that this is what most people are thinking when making the comparison, and it is astonishing because it is so incredibly wrong. Counting time from the point of submission makes no sense, because in one situation the study is completed and in the other it has not yet even begun. To specify the proper counterfactual, we need to account for all of the time that went into conducting and writing up the traditional paper, as well as the time it takes to actually get a journal to accept the paper for publication. And, oh boy, once we start doing that, things don’t look so good for the traditional papers.

In fact, that phase of the process is so messy and so variable that it is really not possible to know how much time to allocate to it. Sure, we could come up with some general estimates, but consider the following:

It is not uncommon to have to submit a manuscript to multiple journals before it is accepted for publication. This is often referred to as “shopping around” the manuscript until it “finds a home.” I know some labs will always start with what they perceive to be the “top-tier” journal in their field and then “work their way down” the prestige hierarchy. In my group we always try to target papers well on initial submission, and yet, looking just at my own papers, about a quarter were rejected from at least one journal before being accepted. This should all sound very familiar to researchers, and it is just plain misery.

It is not uncommon for manuscripts to be submitted, rejected, and then go nowhere at all. This is a well-known part of the file-drawer problem, in which, for a variety of reasons, completed research never makes it to publication. Sometimes this follows the preceding process: researchers send their paper to multiple journals, get rejected from all of them, and then give up. I had a paper that received a revise and resubmit at the first journal we submitted it to, but it was ultimately rejected following the revision. We submitted to another journal, got another revise and resubmit, and then another rejection. This was, of course, extremely frustrating, and so I gave up on the paper. Many years later, one of my co-authors fired us up to submit it to a new journal, and it was accepted... 14 years after I first started working on it. That paper could just as easily have ended up in the file drawer.[4]

It is not uncommon for great research ideas to go nowhere at all. Ideas! We all have great ideas! I get excited about new ideas and new projects all the time. We start new projects all the time. We finish those projects... rarely. I estimate that we have published on fewer than a third of the projects we have ever started, which includes not only those that stalled out at the conceptualization and/or pilot phase, but also those for which we collected data, completed some data analysis, and maybe even drafted the paper. For some of these, we invested a huge amount of time and resources, but just could not finish them off. Things happen, life happens, priorities change, motivations wane. So it goes.

All of the above is perfectly normal and understandable within the normative context of conducting science that we have created. Accordingly, all of it needs to be considered in any discussion comparing the timeliness of Registered Reports and traditional papers. Registered Reports do not completely eliminate all of the above maddening situations, but they severely, severely reduce their likelihood of occurrence. Manuscripts are less likely to be shopped around, less likely to be file-drawered, and if you get an IPA on your great idea, chances are high you will follow through. We need to acknowledge that the true comparison between the two is not what is depicted in Figure 1, but more like Figure 2, where the timeline for Registered Reports is relatively fixed and known, whereas the timeline for traditional papers is an unknown hot mess.

Figure 2. A more accurate comparison between Registered Reports and traditional articles. Note that the timelines are not quite to scale.

(Edit: a couple of people have commented that the above figure is biased/misleading, because Registered Reports can also be rejected following review, submitted to multiple journals, etc. Of course this is the case, and I indicated previously that Registered Reports do not solve all of these issues. But "shopping around" a Stage 1 manuscript is very different from doing so with a traditional article, where way more work has already been put in. Adding those additional possibilities (of which there are many for both types of articles) does not change the main point that people are making the wrong comparisons when thinking about time to publish the two formats, and that Registered Reports allow you to better control the timeline. See this related post from Dorothy Bishop.)

To be clear, I am not claiming that there are no limitations or problems with Registered Reports. What I am trying to bring attention to is the need to make appropriate comparisons when weighing Registered Reports against traditional articles. Doing so requires us to recognize the true state of affairs in our research and publishing process. The normative context of conducting science that we have created is a deeply dysfunctional one, and Registered Reports have the potential to bring some order to the chaos.

[1] I don’t know what to call these. “Traditional” seems to suggest some historical importance. Scheel et al. (2021) called them “standard reports,” which I do not like because they most certainly should not be standard (even if they are). Chambers & Tzavella (2021) used “regular articles,” which suffers from the same problem. Maybe “unregistered reports” would fit the bill.

[2] Over 300 journals have adopted Registered Reports, which sounds great until you hear that there are at least 20,000 journals.

[3] Here, I am making a within-Editor comparison: me handling Registered Reports vs. me handling traditional articles. There are of course wide variations in Editor and journal behavior that make comparisons difficult.

[4] The whole notion of the file drawer is antiquated in the era of preprint servers, but the reality is that preprints are still vastly underused.

Thursday, July 28, 2022

You’re so Vain, You Probably Think This Article Should Have Cited You

Have you ever been upset because an article didn’t cite you? I have.

When I was a doctoral student and new Assistant Professor, whenever I came across a new article in my research area (mostly racial/ethnic identity, in those days), I would immediately look at the reference list to see if they cited my work. I remember even doing this shortly after I published my first paper, when it was impossible that the paper could be cited any time remotely soon, given the glacial pace of publishing in psychology (this was well before preprints were used in the field). The vast majority of times when I checked if I was cited in an article, I was quite disappointed to find that I was not.

This was frustrating for me. Why weren’t other researchers citing my papers? Why was my work being overlooked, when it was clearly relevant? Was there some bias against me, and/or in favor of others?

Over time, I realized that my reactions were all wrong. Yes, my research was relevant and could have been cited, but I was far from the only person studying racial/ethnic identity. Authors certainly are not going to cite all published papers related to the topic. Even if that were possible (which it is not), it would lead to absurd articles and reference lists. So, authors obviously must be selective in whom they cite. Why should they cite me instead of someone else who does related work? If we all believe we should be cited whenever our work is relevant, then we believe that authors should cite all relevant work. That is clearly a nonsensical position, but one that we are socialized into adopting within the bizarrely insecure world of academic publishing. Citations are currency in the academic world, and money can make us act in strange ways.

There is a phenomenon that I have observed (too often) on social media and at conferences that I refer to as “citation outrage,” or the act of publicly complaining about not being cited in a particular paper. This seems to stem from an inflated sense of our own relevance to others’ published work. Of course, your work could be cited in a whole host of papers, but did it need to be cited? Would the authors’ arguments, interpretations, or conclusions be any different if they had cited your paper? Chances are, the answer is no, and in such cases, you should probably just relax.

Now that said, it is not the case that all complaints about lack of citation are the same. Far from it.

Sometimes certain work should indisputably be cited. This can take a couple of different forms. It is nearly always advisable to cite the originator of a term or idea, especially if it is relatively recent, i.e., does not have a clear historical precedent and is not part of common knowledge. Additionally, if one’s work is not just related to the topic area, but directly related to the specific study, then yeah, it should almost certainly be included. To return to my early research, if a paper is focused on narratives of race/ethnicity-related experiences, and how those narratives are related to racial/ethnic identity processes, it would be a strange omission not to include articles I published on that exact topic. That is quite different, though, from expecting my work to be cited in any article related to racial/ethnic identity, which is a broad remit. Indeed, some have heard me complain about a time that our work was not cited when it should have been. I gave a talk on a topic that was relatively novel at the time, and had a subsequent discussion about it with a senior researcher who was in the room. They informed me that they were working on a paper that covered similar ground, so I sent them our published work on the topic. About a year later, I saw the paper published in a high-profile outlet with nary a citation to our previous work, which was directly related and of which I know they were aware. That was both frustrating and academically dishonest: the authors knowingly omitted references to our papers to make their work appear to be novel.

There are additional structural factors around citations that must be considered. There has been quite a bit of attention recently to citation patterns and representation, particularly in regard to gender and race/ethnicity. Several lines of evidence indicate gender and racial citation disparities across a number of fields (e.g., Chakravartty et al., 2018; Chatterjee & Werner, 2021; Dworkin et al., 2020; Kozlowski et al., 2022), with generally more studies focused on gender than race. As with nearly all social science research, however, this literature is difficult to synthesize due to inconsistent analysis practices and lack of attention to confounds, such as working in different fields, seniority, institutional prestige, and disciplinary differences in authorship order (for a discussion of some of the issues, see Andersen et al., 2019; Dion & Mitchell, 2020; Kozlowski et al., 2022). I have not gone deep enough into all of the studies to arrive at a conclusion about the strength of evidence for these disparities, but I certainly have a strong prior that they are real given the racialized and gendered nature of science, opportunity structures, and whose work is seen as valued[1]. Additionally, we know that researchers can be lazy with their citations, relying on titles or other easily accessible information rather than reading the papers (Bastian, 2019; Stang et al., 2018). This kind of “familiarity bias” will almost certainly reinforce inequities.

The recognition of these biases and disparities has led to pushes for corrective action, sometimes under the label of “citational justice” (Kwon, 2022) but more generally in terms of being more aware, transparent, and communicative about citation practices (Ahmed, 2017; Zurn et al., 2020). Various tools have been introduced, such as the Gender Balance Assessment Tool (Sumner, 2018) and the Citation Audit Template (Azpeitia et al., 2022), which provide data on the gender or racial background of the authors in a reference list, raising the awareness of authors’ citation patterns and giving them an opportunity to make changes.

To be blunt, I am not a big fan of these tools, what they imply, or the technology that underlies them. I agree that they can be very useful for raising awareness of our citation patterns, as I imagine few have a clear sense of their own citation behavior. I am less positive about the possible actions that will come from such tools. They reinforce thinking about diversity in terms of superficial quotas: so long as you cite a reasonably equal number of men and women, or some (unknown) distribution of racial groups, then you have done your part towards reducing disparities. They also rely on automated methods of name analysis or intensive visual-search strategies, both of which are highly prone to error. For example, in a widely discussed (and ultimately retracted) article analyzing over 3 million mentor-mentee pairs, the gender of 48% of authors could not be classified (AlShebli et al., 2020). The challenges of automated classification become even greater when moving beyond the gender binary or attempting to classify based on race or nationality. To be fair, the authors of such tools and those who advocate for them acknowledge the limitations and don’t claim that using them will solve all the problems, but that belief is difficult for people “out there” to resist. These quota-based approaches are the typical kind of quick-fix, minimal-effort solutions to addressing disparities that researchers just love. They could be thought of as a form of “citation hacking,” or misuse of citations in service of some goal other than the scientific scope of the paper. They focus on representation (which is not a bad thing), but they don’t at all require that we engage with the substance of the work.

Indeed, although citations are of course important and necessary within the academic economy, the larger issue is one of epistemic exclusion (Settles et al., 2021), the phenomenon of faculty of color’s scholarship being devalued by their White colleagues. The solution to this problem is not citation audits or citation quotas. The solution to this problem is to be more reflective about the work that you engage with, and how it influences your own work. And yes, this includes providing proper credit in the form of citation. The Cite Black Women movement, founded in 2017 by the Black feminist anthropologist Christen A. Smith, is an excellent model for focusing on our practice of reading, appreciating, and acknowledging contributions, rather than on the number or percentages of Black women cited in papers.

So how do we think about all of this together? To be honest, I had only planned to write about citation outrage, but then realized the discussion would be incomplete or confused without including citation justice. At first glance, it may seem like these are the same thing; that is, citation justice is just a more formal type of citation outrage. But this is wrong. Citation justice is seeking to bring attention to the systemic inequities around how we engage with, appreciate, and acknowledge work from marginalized populations within a society stratified by race and gender. Citation outrage is about the irrational sense of entitlement, importance, and relevance that is all too common among academics. I acknowledge that this distinction will be lost on some readers, but in short, one flows from a system of oppression, and the other simply doesn’t.

So then, should that article have cited you? Maybe, maybe not. Probably not. Should you have cited other articles? You always could have, you probably should, and it definitely would be worthwhile to reflect on who you include and why. Again, citations are currency. What should matter more is the substance of the work, but citations impact who gets hired, promoted, awarded, funded, and so on, so it is worth being thoughtful about.

And now, for those of you just here for the Carly Simon:

References

Ahmed, S. (2017). Living a feminist life. Duke University Press.

AlShebli, B., Makovi, K., & Rahwan, T. (2020). RETRACTED ARTICLE: The association between early career informal mentorship in academic collaborations and junior author performance. Nature Communications, 11(1), 1-8. https://doi.org/10.1038/s41467-020-19723-8

Andersen, J. P., Schneider, J. W., Jagsi, R., & Nielsen, M. W. (2019). Meta-Research: Gender variations in citation distributions in medicine are very small and due to self-citation and journal prestige. eLife, 8, e45374. https://doi.org/10.7554/eLife.45374

Azpeitia, J., Lombard, E., Pope, T., & Cheryan, S. (2022). Diversifying your references. SPSP 2022 Virtual Workshop; Disrupting Racism and Eurocentrism in Research Methods and Practices.

Bastian, H. (2019). Google Scholar Risks and Alternatives [Absolutely Maybe]. https://absolutelymaybe.plos.org/2019/09/27/google-scholar-risks-and-alternatives/

Chakravartty, P., Kuo, R., Grubbs, V., & McIlwain, C. (2018). #CommunicationSoWhite. Journal of Communication, 68(2), 254-266. https://doi.org/10.1093/joc/jqy003

Chatterjee, P., & Werner, R. M. (2021). Gender disparity in citations in high-impact journal articles. JAMA Network Open, 4(7), e2114509-e2114509. https://doi.org/10.1001/jamanetworkopen.2021.14509

Dion, M. L., & Mitchell, S. M. (2020). How many citations to women is “enough”? Estimates of gender representation in political science. PS: Political Science & Politics, 53(1), 107-113. https://doi.org/10.1017/S1049096519001173

Dworkin, J. D., Linn, K. A., Teich, E. G., Zurn, P., Shinohara, R. T., & Bassett, D. S. (2020). The extent and drivers of gender imbalance in neuroscience reference lists. Nature Neuroscience, 23(8), 918-926. https://doi.org/10.1038/s41593-020-0658-y

King, M. M., Bergstrom, C. T., Correll, S. J., Jacquet, J., & West, J. D. (2017). Men set their own cites high: Gender and self-citation across fields and over time. Socius, 3, 1-22. https://doi.org/10.1177/2378023117738903

Kozlowski, D., Larivière, V., Sugimoto, C. R., & Monroe-White, T. (2022). Intersectional inequalities in science. Proceedings of the National Academy of Sciences, 119(2), e2113067119. https://doi.org/10.1073/pnas.2113067119

Kwon, D. (2022). The rise of citational justice: how scholars are making references fairer. Nature 603, 568-571. https://doi.org/10.1038/d41586-022-00793-1

Settles, I. H., Jones, M. K., Buchanan, N. T., & Dotson, K. (2021). Epistemic exclusion: Scholar(ly) devaluation that marginalizes faculty of color. Journal of Diversity in Higher Education, 14(4), 493–507. https://doi.org/10.1037/dhe0000174

Stang, A., Jonas, S., & Poole, C. (2018). Case study in major quotation errors: a critical commentary on the Newcastle–Ottawa scale. European Journal of Epidemiology, 33(11), 1025-1031. https://doi.org/10.1007/s10654-018-0443-3

Sumner, J. L. (2018). The Gender Balance Assessment Tool (GBAT): a web-based tool for estimating gender balance in syllabi and bibliographies. PS: Political Science & Politics, 51(2), 396-400. https://doi.org/10.1017/S1049096517002074

Zurn, P., Bassett, D. S., & Rust, N. C. (2020). The citation diversity statement: a practice of transparency, a way of life. Trends in Cognitive Sciences, 24(9), 669-672. https://doi.org/10.1016/j.tics.2020.06.009

[1] I have a paper in which I discuss this, but given the evidence for higher self-citation among men (King et al., 2017), I will sit this one out.

Tuesday, May 24, 2022

Knowing When to Collaborate... and Knowing When to Run Away

As a graduate student, I once went out to lunch with a new post-doc in our department who had similar research interests to mine. We were having a nice chat about personal and professional topics, and at one point I said, “we should think about writing something together.” This clearly made them uncomfortable, and they said something to the effect of, “let’s wait and see if something relevant comes up.” I was a bit confused at the time, because I thought this is what academics did. I thought that “we should collaborate” is academic-ese for “we should be friends.” After some time, I realized how mistaken I was, and how wise they were to be cautious about entering into an unspecified collaboration with someone they barely knew. Over the years, I have now learned this lesson many times over. The purpose of this post is to share some of those lessons on why we should all be cautious about scientific collaboration.

Collaboration and “team science” are all the rage these days in psychology, a field that has traditionally valued a singular, “do it all yourself” kind of academic persona. When I was in graduate school, it was clear that the single-authored paper was the ultimate sign of academic greatness. Plenty of people still think that way, but change is certainly afoot, and there are many excellent articles on the benefits and practicalities of collaborative team science (e.g., Forscher et al., 2020; Frassl et al., 2018; Ledgerwood et al., in press; Moshontz et al., 2021).

Amidst the many discussions about the benefits of team science, there is relatively little coverage of potential pitfalls: what to watch out for as you think about collaborating with new people. How do you know whether to engage in a particular collaboration? How can you ensure that the experience is a positive one? A recent column by Carsten Lund Pedersen, “How to pick a great scientific collaborator,” outlines a framework consisting of three traits to ensure success: choose collaborators who are fun to work with, contribute to the work, and have the same ambition. This is a useful and accurate framework, albeit incomplete (e.g., trust is a key aspect of collaborations, especially within cultural and ethnic minority research; see Rivas-Drake et al., 2016), but sometimes you don’t have sufficient information about these traits of your collaborators until it is already too late. It is critical to attend to possible warning signs in the earliest phases of a collaboration.

This brings me to the reason for writing this entry today: knowing when to run away from potential collaborations. The following examples are from my personal experience, and so of course do not constitute an exhaustive list, but they nevertheless offer a handy guide to the kinds of things one should watch out for when establishing new collaborations.

When you receive vague invitations to collaborate. Successful collaborations are nearly always either a) specific to an existing or planned project or b) an extension of an existing collegial relationship. It is not uncommon for people to propose a potential collaboration, via email or in person at conferences, with no additional details about what the collaborative project might be. These are invitations to collaborate on some unknown future project with someone you don’t really know. This is the type of invitation I described making at the outset of this post; it is generally a bad idea to initiate or accept such invitations, and a good idea to run away.

When you observe inklings of anti-social behavior. Not long ago, I was asked to be part of a project by someone whom I like and respect a great deal on a topic I am enthusiastic about. So far, so good. This person, who was the lead on the paper, shared with the authorship group a 500-word abstract to be submitted for a special issue. Another person on the team, whom I did not know at all, responded with an extremely long and detailed email (2608 words long, to be precise) that heavily centered their own work. I wrote back privately to the lead, essentially saying, “count me out of this business.” To me, this was anti-social behavior, but I acknowledge others would have no qualms with it whatsoever. There is no objective standard for what constitutes inappropriate academic behavior of this kind, but if you don’t feel good about it, if something seems off to you, better to jump ship early and save yourself further trouble. The team went on to write a fantastic paper, and when it was published I had a brief tinge of regret, but I know I made the right decision to run away based on my initial feelings.

When you do not want to work with one of the other collaborators. Similar to the previous story, not long ago, I was asked to be part of a project by someone who I like and respect a great deal on a topic I am enthusiastic about. I immediately agreed to be part of the team. When the follow-up email was sent to the full authorship team, however, I saw that one of the other collaborators was someone with a poor history of collaboration, mentorship, and collegiality. I was simply not willing to work with this person. I wrote back to the lead, and regretfully rescinded my involvement, explaining my reasons why. This experience highlighted how you should always find out who else will be involved with a project before agreeing to participate. As I wrote in my email, “I treasure my collaborations and always seek happiness and positivity from the work that I do, and part of that is knowing when something is a bad idea.” If you fear that the collaboration will not bring you happiness, it is best to run away.

When your views are not being respected. Collaborations can be extremely difficult, because we do not all see the world or our disciplines in the same way, and some collaborations involve multiple people who are accustomed to being “in charge.” It can sometimes be impossible to adequately represent everyone’s views. A paper I contributed to involved bringing together multiple groups of people who each had some experience with others on the team, but not everyone had previously worked together. There was a clash of styles in the approach to writing the paper, and one of the authors did not feel that their views and contributions were being respected by the lead author. Accordingly, the author who was not feeling respected decided it was best to cease the collaboration and be removed from the paper. This can be a difficult decision, but it is almost always the correct one. There are many opportunities out there, and if you are not enjoying what you are doing, not feeling respected by your coauthors, and not feeling like you can maintain your integrity through the collaboration, then it is best to just run away.

When you cannot be a good collaborator. My previous warnings focused on other people and their behavior, but sometimes the problem is you. Sometimes, you are just not in a good position to be a productive collaborator. The major culprit here is time, and our tendency to over-extend and take on too much. In recent years, I have taken to thinking really hard about whether I have the time and energy to engage in a collaboration, and I try to answer that question realistically. That is, I no longer fall prey to the fallacy that I will have more time in the future than I do now. That is always false. So, now I frequently decline invitations, or do not pursue opportunities, because I know that I would be a bad collaborator: I wouldn’t respond to emails, I wouldn’t provide comments, and I wouldn’t meet any of the deadlines. For the projects that I do agree to, I am clear about my capacity and what I can actually contribute. If you feel that you can participate in a project, but only contribute in a minor capacity, say so up front! That will save a lot of heartache down the road. But, as always, sometimes the wise move is to just run away.

When you want to say what you want to say. I have been involved in a couple of relatively large, big-ego type collaborations that resulted in some published position papers. These collaborations were extremely valuable and constitute some of the major highlights of my career. But the papers we produced were not very good. Team science and diverse authorship teams have many, many benefits, but it is also difficult to avoid gravitating toward the median, centrist view (see Forscher et al., 2020). The result is that the arguments become watered down in order to appease the other co-authors. If you want to argue for something radical, that will often be difficult to do with ten co-authors who also have strong opinions. Sometimes, you just need to go at it on your own, or with a small group of like-minded folks. To be clear, I am not saying that all big collaborations lead to conservative outputs. That is clearly not the case. But it is a risk, and you should assess whether you will be happy with that outcome, or whether you should run away.

When you realize there are few things better than lovely collaborators. Ok, this is not a warning sign at all, quite the opposite! I do not want readers to take this post as anti-collaboration. Rather, it is a plea for engaging in highly selective collaborations. I do not want to engage in collaborations that do not bring me happiness. I need to have fun. I need to love the work that I am doing, and I need to love the people I am doing it with. I am fortunate to have three continuous, life-long collaborators in Linda Juang, Kate McLean, and the Gothenburg Group for Research in Developmental Psychology led by Ann Frisén. Working with these folks, and with many others (especially current and former students), is among the great joys of my work. Indeed, collaborations can be the highlight of our academic lives, but only if they are done thoughtfully.

There are certainly plenty of other red flags to watch out for or reasons to not collaborate. This is not an exhaustive list, but a few lessons from my own experience. Please share any additional experiences that you have, and perhaps I will update this post, giving you credit of course (hey, a potential collaboration!).

References

Forscher, P. S., Wagenmakers, E., Coles, N. A., Silan, M. A., Dutra, N. B., Basnight-Brown, D., & IJzerman, H. (2020, May 20). The benefits, barriers, and risks of big team science. PsyArXiv. https://doi.org/10.31234/osf.io/2mdxh

Frassl, M. A., Hamilton, D. P., Denfeld, B. A., de Eyto, E., Hampton, S. E., Keller, P. S., ... & Catalán, N. (2018). Ten simple rules for collaboratively writing a multi-authored paper. PLOS Computational Biology, 14(11), e1006508. https://doi.org/10.1371/journal.pcbi.1006508

Ledgerwood, A., Pickett, C., Navarro, D., Remedios, J. D., & Lewis, N. A., Jr. (in press). The unbearable limitations of solo science: Team science as a path for more rigorous and relevant research. Behavioral and Brain Sciences. https://doi.org/10.31234/osf.io/5yfmq

Moshontz, H., Ebersole, C. R., Weston, S. J., & Klein, R. A. (2021). A guide for many authors: Writing manuscripts in large collaborations. Social and Personality Psychology Compass, 15(4), e12590. https://doi.org/10.1111/spc3.12590

Pedersen, C. L. (2022). How to pick a great scientific collaborator. Nature. https://doi.org/10.1038/d41586-022-01323-9

Rivas-Drake, D., Camacho, T. C., & Guillaume, C. (2016). Just good developmental science: Trust, identity, and responsibility in ethnic minority recruitment and retention. Advances in Child Development and Behavior, 50, 161-188. https://doi.org/10.1016/bs.acdb.2015.11.002